I helped my 14-year-old son with his homework today and there was a question about how to convert from Kilobytes (KB) to Megabytes (MB). My instinct was to tell him to divide by 1024 (the more technically accurate version of a KB) but we both decided the answer they wanted was 1,000.

In my work creating websites and web applications we sometimes report on filesizes, usually in human-readable formats such as reporting on the filesize in MB. For example, a document listing may include the filesize to give the user an idea of how long a download may take.

So this made me think about how we calculate human-readable versions of filesizes on websites. In the past we tended to divide bytes by (1024 * 1024) to get to MB. Now I wasn’t so sure. So I had a bit of a read around.

Binary and decimal units

Historically computers have always used binary units, since that’s how computers work. At their simplest level everything is either a 1 or a 0.

Traditionally a kilobyte is 1024 bytes, a megabyte is 1024 kilobytes, a gigabyte 1024 megabytes, and so on. This is called base 2 (or binary) since these numbers are all a power of 2 (1024 = 2^10 bytes).

As computers became more mainstream people naturally assumed a kilobyte meant 1000 bytes, a megabyte 1000 kilobytes, since base 10 (or decimal) is what we’re used to as humans.

So we currently have two ways to describe a kilobyte: decimal (1,000 bytes) or binary (1,024 bytes).
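To make the two definitions concrete, here is a minimal Python sketch that prints both side by side. The constant names are my own and purely illustrative; they don't come from any library.

```python
# Two competing definitions of the same prefixes.
# Constant names are illustrative, not from any library.
KB_DECIMAL = 10**3   # 1,000 bytes
KB_BINARY = 2**10    # 1,024 bytes

MB_DECIMAL = 10**6   # 1,000,000 bytes
MB_BINARY = 2**20    # 1,048,576 bytes

GB_DECIMAL = 10**9   # 1,000,000,000 bytes
GB_BINARY = 2**30    # 1,073,741,824 bytes

for name, dec, binary in [
    ("KB", KB_DECIMAL, KB_BINARY),
    ("MB", MB_DECIMAL, MB_BINARY),
    ("GB", GB_DECIMAL, GB_BINARY),
]:
    print(f"1 {name}: {dec:,} bytes (decimal) or {binary:,} bytes (binary)")
```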

Messy real world definitions

There seems to be a lot of confusion in computing, with some developers using the “more accurate” binary unit to calculate file sizes and others using the decimal unit.

In the early days of the web most computers used binary units to report filesizes. This has changed over time.

It turns out hard drive manufacturers refer to storage sizes using the decimal format. So a 100 MB hard drive is actually 100 * 1000 KB (rather than 100 * 1024 KB). This results in a smaller storage space than if you used the binary unit to calculate storage size. For example, 1 GB is 1,000,000,000 bytes in decimal or 1,073,741,824 bytes in binary, so the decimal version is around 7% smaller. Good for sales, less good for the consumer.
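The gap between the two definitions isn't fixed: it grows with each prefix. As a rough sketch (the function name `decimal_shortfall` is my own invention), this works out how much smaller the decimal unit is at each step:

```python
def decimal_shortfall(power: int) -> float:
    """Percentage by which the decimal unit is smaller than the binary one.

    power is 1 for KB, 2 for MB, 3 for GB, 4 for TB.
    """
    decimal = 1000 ** power
    binary = 1024 ** power
    return (1 - decimal / binary) * 100


for label, power in [("KB", 1), ("MB", 2), ("GB", 3), ("TB", 4)]:
    print(f"{label}: decimal is {decimal_shortfall(power):.1f}% smaller")
```

At the kilobyte level the difference is a little over 2%, at the gigabyte level it is just under 7%, and at the terabyte level it is around 9%.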

There’s even a Wikipedia page on the confusion this has created. Interestingly this notes that the US legal system has decided “1 GB = 1,000,000,000 bytes (the decimal definition) rather than the binary definition.”

There are also standards. IEC 80000-13, published in 2008, defines a kibibyte (or KiB) as 1024 bytes and a kilobyte (KB) as 1000 bytes.

According to the Institute of Electrical and Electronics Engineers (IEEE) the decimal format should be used as standard unless noted on a case-by-case basis (see Historical Context on this NIST reference page). The decimal format follows SI, the International System of Units, which defines the prefix kilo as 1,000.

So technically you should write KiB if you mean 1024 bytes. But it turns out very few people do this, and everyone just sticks to kilobytes or KB whether they mean decimal or binary.

So today we’re still stuck with some people using KB = 1024 bytes and some people using KB = 1000 bytes. Yay!

However, clearly most people don’t care. And storage sizes are so large now most people don’t really notice the differences. Unless you’re a computer or web engineer who has to do calculations on this sort of thing.

What do modern operating systems use?

Well, here’s where it gets interesting.

In my early days of web development (which started around 1999) I used a Windows PC; these days I use a Mac. While hard drives advertised their size in decimal units, Windows itself reported filesizes in binary. So in practical terms a 1 GB hard drive actually had less space for file storage on it (around 953 MB available space). I remember that annoying me!

In the early days of Macs and smartphones they also reported filesizes in binary units. So it made sense that most people used binary units to report filesizes on web apps.

From 2009 Macs switched to reporting file sizes in decimal (with Mac OS X Snow Leopard, presumably in response to the IEC standard). This didn’t happen until 2017 for iOS and Android.

Today Ubuntu Linux, Mac OS, iOS and Android use decimal for file storage sizes. Windows, as far as I’m aware, still uses binary units. However, to spice things up Microsoft’s cloud office service 365 uses decimal units when referring to cloud storage size!

So today if you have a file which is 500,000 bytes in size this would report as 488 KB (binary) on Windows and 500 KB (decimal) on Macs, Ubuntu Linux and modern smartphones.
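Here is that 500,000 byte example as a quick Python sketch, so you can check the arithmetic yourself. The variable names are just for illustration.

```python
size_bytes = 500_000

binary_kb = size_bytes / 1024   # binary: 1 KB = 1,024 bytes (Windows)
decimal_kb = size_bytes / 1000  # decimal: 1 KB = 1,000 bytes (Mac, Ubuntu, phones)

print(f"Binary:  {binary_kb:.0f} KB")   # Binary:  488 KB
print(f"Decimal: {decimal_kb:.0f} KB")  # Decimal: 500 KB
```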

What works for users?

Which is right? To be honest, I don’t think that matters. What’s more important is which makes more sense for your users.

Most web development resources still tell you to use binary units to convert between file storage sizes (e.g. bytes to KB).

But as you can see, almost everyone else uses decimal units in the real world (except for Windows OS – but even Microsoft uses decimal for their cross-platform 365 service).

When building web applications it’s always best to do what is best for your users. So now, most of the time I think it makes more sense to report filesizes using decimal units rather than binary (so 1,000 bytes = 1 KB). Which is the opposite to what I thought before I started writing this post!
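As a rough idea of what this could look like in code, here is a minimal Python sketch of a filesize formatter that defaults to decimal units. The function name `format_filesize` and its `binary` option are my own assumptions rather than anything from a particular framework. When binary is requested I've labelled the output KiB, MiB and so on, following the IEC naming, so the two can't be mixed up.

```python
def format_filesize(size_bytes: int, binary: bool = False) -> str:
    """Return a human-readable file size.

    Decimal (1 KB = 1,000 bytes) by default; pass binary=True for
    1,024-based units, labelled KiB/MiB/GiB/TiB.
    """
    if size_bytes < 0:
        raise ValueError("size_bytes must not be negative")

    base = 1024 if binary else 1000
    units = ["KiB", "MiB", "GiB", "TiB"] if binary else ["KB", "MB", "GB", "TB"]

    # Anything smaller than one unit is shown as a plain byte count.
    if size_bytes < base:
        return f"{size_bytes} bytes"

    size = float(size_bytes)
    unit = units[0]
    for unit in units:
        size /= base
        # Stop at the first unit where the number drops below the base.
        if size < base:
            break
    return f"{size:.1f} {unit}"


print(format_filesize(500_000))               # 500.0 KB
print(format_filesize(500_000, binary=True))  # 488.3 KiB
print(format_filesize(1_500_000))             # 1.5 MB
```

Keeping the base as a single switch means you can change your mind later (or match a specific platform) without touching the rest of the formatting logic.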

Just to make things fun, other measurements which use kilobytes actually do use binary units consistently, computer memory (or RAM) being the obvious example. As far as I know every system out there uses binary units for measuring memory!

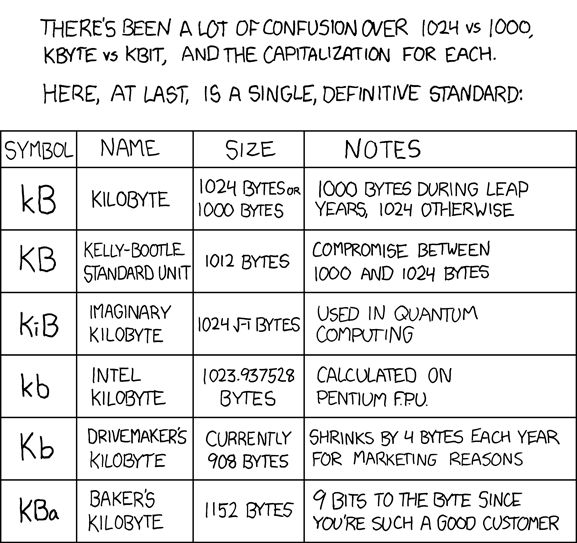

If this is all too much, I’ll leave you with the excellent xkcd web comic, kilobyte edition: